The AGI Misunderstanding: Why LLMs Can't Think of a Number

- Author: هاني الشاطر

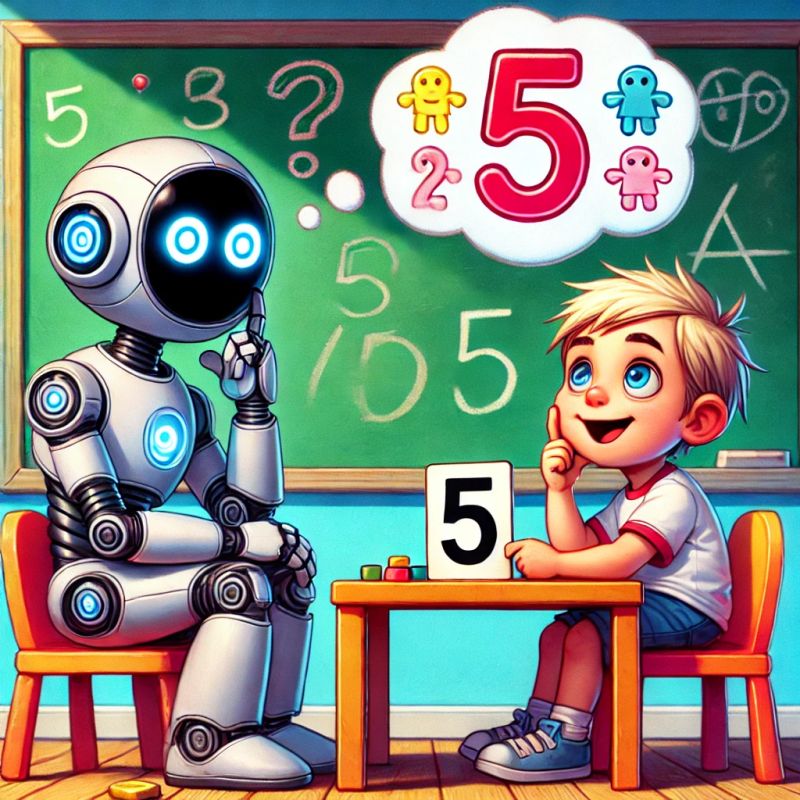

I opened LinkedIn this morning after a relaxing weekend, and everyone seems to be freaking out about one thing: AGI. I want to clear up this misunderstanding, but first, let's play a game. My daughter actually wanted to try this game with Gemini, and it really shows something important.

Let's do this together.

- Think of a number.

- Take from your friend the same number.

- Take from me 10.

- Throw half of the sum in the sea.

- Return to your friend his number.

Now, tell me: what number do you have left? You should have 5, whatever number you started with. The game was rigged, but it illustrates a crucial point. Imagine we play this game with any new AI. Here's my challenge to the AGI believers: what number did your supposed AI initially think of?
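If you want to see why the game is rigged, here is a minimal Python sketch of the five steps. The function name `play_game` is just something I made up for illustration. Algebraically, (x + x + 10) / 2 - x = 5, so the starting number cancels out.

```python
def play_game(secret: int) -> int:
    """Walk through the five steps of the game for a given starting number."""
    total = secret            # 1. think of a number
    total += secret           # 2. take the same number from your friend
    total += 10               # 3. take 10 from me
    total -= total // 2       # 4. throw half of the sum in the sea (2x + 10 is always even)
    total -= secret           # 5. return your friend's number
    return total


# Try a few different starting numbers; the answer never changes.
results = {n: play_game(n) for n in (1, 7, 42, 1000)}
print(results)  # {1: 5, 7: 5, 42: 5, 1000: 5}
```

The only thing that survives is half of the 10 I handed you, which is why the final answer says nothing about the number you picked.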

The answer, of course, is none. LLMs can't "think" of a number; they can only predict the next tokens. Boom!

This simple game exposes a fundamental truth about LLMs: they are, at their core, sophisticated next-token prediction engines. Sure, they can mimic human language remarkably well, creating text that often seems intelligent. We can boost their performance with all sorts of tricks, but under the hood, they're still just predicting the next most likely token.
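To make "predicting the next most likely token" concrete, here is a deliberately tiny sketch. It is a toy bigram counter, not how a real LLM is built: the corpus and the helper names (`build_bigram_counts`, `predict_next`, `generate`) are all made up for illustration, and a real model replaces the counting table with a neural network trained on vastly more text.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, split into word-level "tokens" (an assumption for this toy).
CORPUS = "think of a number . think of a game . think of a number".split()


def build_bigram_counts(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, length):
    """Greedily extend `start` by up to `length` predicted tokens."""
    output = [start]
    for _ in range(length):
        next_token = predict_next(counts, output[-1])
        if next_token is None:
            break
        output.append(next_token)
    return output


counts = build_bigram_counts(CORPUS)
print(" ".join(generate(counts, "think", 3)))  # think of a number
```

Notice that the toy "says" the phrase "think of a number" without any number ever being stored anywhere. It only follows the statistics of its corpus.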

If an AI can't even engage in the basic act of "thinking" of a number, how could it possibly form a genuine opinion? Forming an opinion requires a perspective, a subjective viewpoint shaped by experience, internal values, and a sense of self. LLMs possess none of these.

The ability to form opinions is essential for navigating complicated problems. We often confuse these with complex problems. Complex problems have many moving parts but are solvable with enough data and computational power – AI's forte. Complicated problems, on the other hand, involve ambiguity, nuanced context, and require us to create a new value based on a unique perspective. These are the human problems, the messy, social, and cultural challenges that don't have a single right answer, only the answer that rings true to our individual point of view. AI may solve complex problems, but complicated ones? Not a chance.

When you look at the science of how our brains work – neuroscience and cognitive science – it becomes clear that the attention mechanism used by transformers is limited. The human cognitive system is far more complicated than attention-based machines. It can be mind-blowing when you dive into the details of cognition and consciousness and how they link to our biology.

I'm not saying AI is useless. Machines already outperform us in many areas: recalling information, raw computation, mastering games like Go, and countless other tasks. We should absolutely celebrate advancements in AI, but we need to be realistic about its limitations. Until my AI can genuinely "think of a number," or grasp the concept of desire, the dream of AGI will remain just that – a dream.
